One of our key aims during this upgrade has been to minimise the period of coexistence between Exchange 2007 and Exchange 2010. This is because our testing phase had revealed a number of potential areas in which we could expect user dissatisfaction, at least up until we were able to migrate their mailboxes to the new servers. These potential issues included:

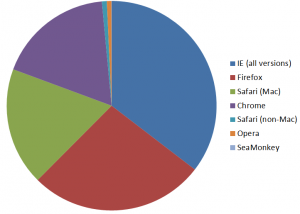

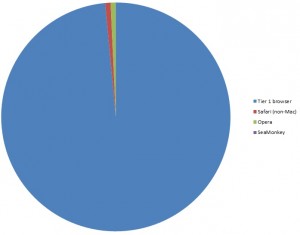

- OWA Double-authentication

In this scenario (non IE users) are asked to logon to Exchange 2010 OWA, are redirected to Exchange 2007 to find their mailbox, at which point they’re then asked to authenticate again. This is due to ISA presenting a cookie that only IE is happy to accept.

- Mac Mail reconfiguration

It seems that Mac Mail only uses Autodiscover during its initial set-up, so wouldn’t be redirected to the ‘legacy’ namespace during coexistence. Mac Mail would need to be reconfigured with a new URL at the start of coexistence and then back to the original one again once the mailbox had been migrated. This configuration data is held in a PLIST file and although it’s possible to be edited, it’s stored in a binary format that also contains user-specific values (so we couldn’t easily provide a downloadable version to do the reconfiguration for our users)

- Other EWS clients

Our UNIX population would potentially suffer the same need to reconfigure (twice) as Mac Mail users

- Outlook 2003

We initially expected problems here too (due to the product not being aware of Autodiscover).

Clearly the sensible approach is to minimise the amount of time spent in coexistence and avoid these issues completely. Our Project Board recently confirmed that this was the tack we should be aiming to follow. But other decisions we’d made along the way, such as sticking to the same namespace, while great for avoiding users having to reconfigure, are not so good if you want a ‘big bang’ migration. A lengthy period of coexistence seemed inevitable.

Figures showed that we could consistently achieve throughput figures in the region of 20GB/hr when migrating between the two systems. But with 25TB to move that would still leave us with those coexistence worries for far too long. Something had to give: we either needed a rethink to avoid (or at least mitigate) the coexistence problems or we’d have to find a way to make the migration happen faster.

A bit of digging revealed that we might be able to improve things on the latter. Data transfer was being throttled back by the Mailbox Replication Service (MRS). This runs on the Client Access Servers and effectively takes the effort of moving data off the mailbox servers. That’s good news for two reasons: you get faster mailbox servers and move requests no longer lock out the console during the task, as it used to.

However transferring the moving task to the CASs means that user connections could be affected by back-end mailbox move tasks taking up too much of the system’s resources. To ensure that the CASs are still able to serve user connections during mailbox moves the default MRS settings have therefore been set to pretty conservative values.

This makes sense in a production environment: client responsiveness is usually more important than a mailbox move. But since our servers aren’t going to be handling user requests just yet we don’t need quite so much caution. I therefore did some editing…

The file which controls the Mailbox Replication Service (MRS) is called MSExchangeMailboxReplication.exe.config and (on a default installation) you’ll find it here:

C:\Program Files\Microsoft\Exchange Server\V14\Bin

Right at the end of this file is the section that we’re interested in:

MaxMoveHistoryLength = “2”

MaxActiveMovesPerSourceMDB = “5”

MaxActiveMovesPerTargetMDB = “5”

MaxActiveMovesPerSourceServer = “50”

MaxActiveMovesPerTargetServer = “5”

MaxTotalMovesPerMRS = “100”

The values which had potential to affect users on the current servers were left alone (that’s MaxActiveMovesPerSourceMDB and MaxActiveMovesPerSourceServer). These values can range from zero to 100 and 1,000 respectively.

The MaxActiveMovesPerTargetMDB value was the setting I increased, first to 25, to gauge the effect. This setting is also on a zero to one hundred scale. I then tweaked MaxActiveMovesPerTargetServer to 25. This value goes up to 1,000 so represented a pretty cautious increase, just to see what kind of load it generated. Finally the MaxTotalMovesPerMRS value can be upped too. Depending on where you read it, this value tops out at either 1000 or 1024. Since the config file itself lists its ceiling as 1024, that’s the number I’ve assumed to be right. On that basis though, Microsoft’s technet seems to be quoting the erroneous value.

The ‘Microsoft Exchange Mailbox Replication’ service must be restarted for changes to take effect and of course the edits will need to be done on all of your CASs.

To allow migrations to be tested without impacting upon service I’ve been using the ‘suspendwhenreadytocomplete’ switch on the Powershell command. Essentially this copies over the bulk of the users’ mailboxes and then suspends the job just before it commits the change to Active Directory. If an autosuspended move is cancelle,d instead of being completed, the destination server’s data gets removed on the same cycle as for deleted mailboxes. These move requests won’t get removed automatically – even the successful ones – so if you’re planning on doing subsequent moves you’ll have to get into the habit of housekeeping…

Users are none the wiser about this background copying of their mailbox: their live data has remained exactly where it was. The other great feature of this ‘move and hold’ option is that you get a chance to find which mailboxes have corrupt content – those mailboxes will report as a failed move – again without affecting anyone’s service. If you’re an Outlook user, it’s pretty similar to the process by which Outlook creates an offlline copy of your mailbox (the OST file) at your desktop.

Once all of your data has been copied across, and all the mailboxes are showing as ‘automatically suspended’, completing the move only involves committing the changes to the directory and copying over the deltas (the changed content since that initial copy operation). In theory this could be months later – although your retention period might start deleting the suspended moves after a while. But even if that happened it doesn’t stop the final move from working: the normally-brief delta-copying phase will simply become another full mailbox copy.

This final stage is the only point at which users might notice a service impact (as the final commit briefly locks the user’s mailbox). Outlook users will be told ‘An administrator has made a change which requires you to close and restart Outlook’. OWA users will be told that their mailbox is being moved; other clients may find their program ‘gets confused’. This will therefore be the one part of the job where we need to keep our users and IT support staff well informed.

In theory this ‘move and hold’ option would allow us to migrate all 50,000 mailboxes in a much shorter coexistence window, but only if we can get the data across at a reasonable speed and if having this number of suspended moves didn’t break something. Nothing on the internet suggested that anyone had tried a ‘move and hold’ operation on the scale I was proposing…

This is off my usual subject matter but I’m so excited by this amazing little computer that I had to write something about it.

This is off my usual subject matter but I’m so excited by this amazing little computer that I had to write something about it.